7 Crucial Web Scraping Best Practices You Must Be Aware of

In this blog on web scraping best practices, we delve into essential procedures, recommended strategies, and dos and don’ts. By understanding these fundamentals, businesses can optimize their webscraping endeavors while ensuring compliance with ethical standards.

Supercharge Your Software Development! Breakthrough IP Blocks, Conquer Website Changes, and Never Fear Getting Blocked!

“Over 82% of e-commerce organizations are now using web scraping to gather external data to help guide their decision-making, overtaking internal data collection (81%), which stands as the number one method in nearly every other sector.”- PMW

What is Scraping: Detailed Overview

Web scraping is the process of systematically extracting data from the internet, typically accomplished through automated scripts or applications. This technique involves programmatically retrieving information from web pages by downloading and parsing their content. The primary objective of web scraping is to gather targeted data from various online sources efficiently. Our comprehensive guide offers in-depth insights into the intricacies of web scraping, elucidating the mechanisms by which automated scripts navigate web pages to extract pertinent information. By utilizing this method, users can streamline the collection of data for diverse applications, ranging from research and analysis to data-driven decision-making. Understanding the nuances of web scraping is crucial for harnessing its potential while ensuring compliance with ethical and legal considerations.

Why is Web Scrapping Useful?

Automated web scraping streamlines the retrieval of public data, offering significant time savings compared to manual methods. The efficiency of this process is notably superior, making web scraping an invaluable tool for expeditious data acquisition across diverse applications. Web scraping is also beneficial for the following:

- Competitor Analysis: Conduct thorough competitor analysis by employing web scraping to monitor and extract valuable insights from rival websites, including product offerings, pricing structures, and promotional strategies for strategic decision-making.

- Social Media Sentiment Analysis: Despite the fleeting nature of social media posts, aggregating them through scraping unveils valuable trends. In cases where APIs fall short, web scraping ensures access to real-time data on sentiments, topics, and more.

- Market Research: Web scraping proves invaluable in market research, offering a powerful means to gather targeted information within specific sectors such as real estate. This tool enhances data collection for strategic insights.

- eCommerce Pricing: E-commerce enterprises can optimize pricing strategies by employing web scraping to monitor product prices across multiple platforms. This proactive approach enables businesses to identify profitable market opportunities and enhance profit margins.

- Machine Learning: As a primary data source, scraped data can significantly bolster AI and machine learning operations. Leveraging this resource can enhance the efficiency and accuracy of data-driven models.

7 Crucial Web Scraping Best Practices

Web scraping is integral for streamlined data collection, insightful decision-making, and maintaining competitiveness in the data-centric landscape. Adherence to established web scraping best practices is crucial to ensure ethical and effective online scraping methodologies.

1. Consider Website’s Guidelines

1. Consider Website’s Guidelines

Web scraping best practices are crucial for responsible data extraction. Think of a website as someone’s house, where the robots.txt file serves as guidelines for bots. It dictates which pages can be scraped, how frequently, and which are off-limits. Equally important is reviewing the Terms of Service (ToS) – a contractual agreement between you and the website. Some ToS explicitly prohibit scraping, and violating these policies can have consequences. While not always legally binding, disregard can lead to negative outcomes. A cardinal rule: refrain from extracting information behind logins, especially on social media platforms, as this poses serious legal risks, as evident in past lawsuits. Adhering to these principles ensures ethical web scraping practices.

2. Discover API Endpoints

Modern websites prioritize user experience across various devices using client-side rendering, employing JavaScript to directly render HTML in browsers. This, however, introduces challenges for web scraping due to features like unlimited scrolling and lazy loading. On a positive note, many interactive sites utilize backend APIs to fetch elements, presenting an opportunity. JavaScript orchestrates content delivery in .json format, often via a concealed API even if undocumented. Extracting data from JavaScript-driven websites typically involves loading JavaScript and parsing HTML. However, for more efficient data retrieval and reduced bandwidth usage, consider reverse engineering the API endpoint through request inspection. GraphQL endpoints are particularly advantageous for handling extensive datasets on dynamic websites, offering an effective solution for structured data extraction. Mastering these techniques ensures adept handling of the evolving landscape of modern web development.

3. Rotate IP Address

Efficient web scraping requires swift connection requests, yet websites deploy various measures like CAPTCHAs, request limitations, and IP address restrictions to thwart scraping activities. IP rotation serves as a solution, facilitated through proxies. Opt for a rotating proxy service to dynamically switch proxy IPs with each connection request, ensuring a smoother scraping process. While sticky sessions maintain the same identity across multiple requests, they should be used judiciously, depending on your specific requirements. It’s crucial to note that some cloud hosting services prohibit IPs from data center proxies, necessitating the use of residential addresses as a viable alternative. Adhering to these web scraping best practices, particularly in IP management, enhances efficiency and helps navigate around potential obstacles imposed by anti-scraping technologies.

4. Use Proper Scraping Methods

Implementing responsible and effective data extraction requires adopting suitable scraping strategies. To prevent server overload, maintain a reasonable scraping pace. Respect the guidelines outlined in a website’s robots.txt file and incorporate delay measures between requests. Leveraging web scraping frameworks and libraries enhances the overall efficacy and efficiency of the extraction process. These tools not only streamline the development of scraping procedures but also provide functionalities like handling request delays and managing concurrent connections. By combining a considerate scraping pace, adherence to robots.txt guidelines, and the utilization of robust frameworks, you ensure a responsible and optimized approach to web scraping, promoting smoother interactions with target websites while avoiding potential disruptions or inconveniences.

5. Robust Error Handling

Effectively managing errors during web scraping is imperative to mitigate disruptions like HTTP errors or connection timeouts. Implement robust error-handling procedures by anticipating potential issues. Identify common problems, such as server faults or network timeouts, and devise effective strategies to address them. Employ retry mechanisms to reattempt failed requests, ensuring continuous data extraction and swift resolution of short-term problems. Set realistic limitations to prevent excessive retries. Design error-handling systems with minimal data loss, incorporating checkpoints to record scraped data. This allows resumption from the last successful point in case of an error, eliminating the need to restart the process. Establish a comprehensive logging system to document errors and issues encountered during scraping. This not only simplifies troubleshooting but also provides valuable insights into potential problems or emerging patterns. These practices underscore the importance of meticulous error management for seamless and reliable web scraping processes.

6. Use a Headless Browser

A headless browser, devoid of a graphical user interface, is employed for efficient web scraping. Unlike standard browsers, it omits the rendering of visual elements such as scripts, photos, and videos. In scenarios where web content is rich in media, employing a headless browser expedites the extraction process. Traditional browser-based scrapers, rendering all visual components, may be time-consuming when dealing with multiple websites. Headless browsers, however, directly scrape content without rendering the entire page, circumventing bandwidth constraints, and enhancing the speed of web scraping. This approach optimizes resource utilization, allowing for swift and streamlined data extraction while mitigating the unnecessary loading of visual content. Utilizing headless browsers aligns with web scraping best practices, prioritizing efficiency, and resource management in the extraction of information from diverse online sources.

7. Make Your Browser Fingerprint Less Unique

Websites employ diverse browser fingerprinting methods to track user behavior and gather personalized data for targeted content delivery. When accessing a website, your digital fingerprint, encompassing details like IP address, browser type, operating system, time zone, extensions, user agent, and screen dimensions, is transmitted to the server. Suspicious fingerprint-based behavior can lead to IP address blocking by the server, hindering scraping efforts. Mitigate browser fingerprinting risks by utilizing a proxy server or VPN. These tools conceal your genuine IP address during connection requests, preventing detection and safeguarding your computer’s identity. A VPN and proxy services offer an effective shield against the implications of browser fingerprinting, enhancing privacy and enabling secure data extraction without the risk of being flagged or blocked by target web servers. Employing these measures aligns with web scraping best practices, ensuring a discreet and unimpeded scraping experience.

Typical Challenges When Web Scraping

Experienced web scrapers often face challenges when extracting data from specific websites. Understanding common mistakes and employing recommended methods is essential for successful data retrieval in web scraping endeavors.

- Honeypot Traps: Certain websites employ advanced tactics, such as honeypot traps featuring invisible links detectable only by bots. These links, concealed through CSS properties or camouflaged within the page’s background color, trigger automated bot labeling, and blocking when discovered and clicked, posing challenges for web scrapers.

- AJAX Elements: Websites utilizing AJAX (asynchronous JavaScript and XML) load data dynamically, enabling server communication without page refresh. This approach, common in endless scrolling pages, poses challenges for scraping, as data appears post-HTML loading. Successful scraping requires JavaScript execution and rendering capabilities to retrieve data from websites employing this technique.

- CAPTCHAs & IP Bans: CAPTCHA, or the Fully Automated Public Turing Test, distinguishes between computers and humans online. Familiar formats include checkbox verification, image recognition, or typing distorted characters. Failing these tests restricts access. IP tracking and blocking, a common anti-scraping method, utilizes IP fingerprinting and logs browser data to identify and ban bots.

Overcome Web Scraping Challenges with NextGen Invent

Businesses leverage web scraping to enhance their processes, though it comes with challenges. Anti-scraping technology poses obstacles necessitating innovative solutions. Efficiently extracting large datasets demands strategic control. Managing latency, adapting to structural web page changes, and handling dynamic content are additional hurdles. Ethical considerations, including respecting website owners’ rights, add complexity. As a custom software development services company, we know that understanding and addressing these challenges is essential. By staying informed and proactive, businesses can navigate potential issues, ensuring responsible and effective use of technology for data extraction. A comprehensive approach to web scraping not only maximizes the benefits for business processes but also upholds ethical standards and legal considerations in the digital landscape.

NextGen Invent, an agile software development services company, provides diverse webscraping services for establishing robust and efficient scraping infrastructure. Connect with us today to explore our offerings and discover how we can cater to your specific web scraping requirements with precision and reliability.

Related Blogs

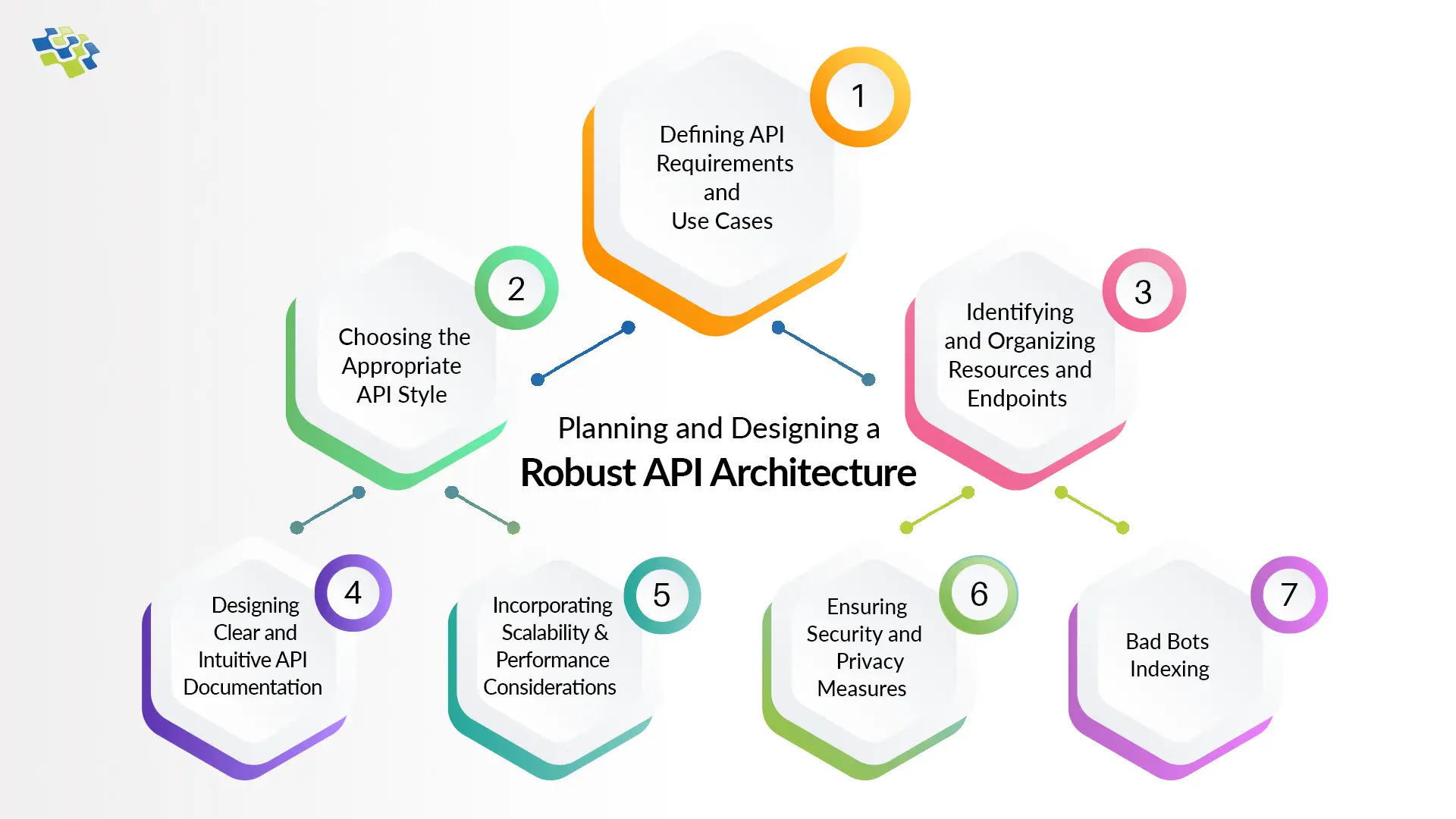

How to Build a Robust API Architecture?

APIs play a crucial role in connecting modern technology with commercial ecosystems. With API architecture, businesses can leverage data monetization, forge valuable partnerships, and unlock new avenues for innovation and expansion.

5 Android Frameworks for Faster App Development

Today, mobile apps have become an integral part of our daily lives, profoundly impacting our routines, work habits, and study patterns. In this guide, we will delve into the top 5 widely used tools for developing Android frameworks.

A Step-by-Step Guide for Mobile App Development

In today’s world, smartphones have become ubiquitous, transcending geography and industries. It is universally acknowledged that engaging with consumers through mobile devices is the most effective way to capture their attention, generate interest in a brand, and drive sales.

Stay In the Know

Get Latest updates and industry insights every month.

1. Consider Website’s Guidelines

1. Consider Website’s Guidelines